January 2, 2026: By Peter McAliney.

For decades, automation promised efficiency: machines replacing humans in repetitive, rule-based tasks. But today, efficiency alone is not enough. Organizations operate in a world defined by volatility, complexity, and relentless change. What they need is adaptability, creativity, and trust. That’s why the conversation must shift from automation to human–AI partnership, a model where technology amplifies human judgment rather than replaces it.

Figure 1: The Human–AI Partnership

Unlike traditional automation, which runs on static rules, partnership thrives on dynamic decision-making. AI brings predictive analytics and computational scale; humans contribute intuition, creativity, and ethical oversight. Together, they form a continuous learning loop: humans train AI, AI generates insights, and humans refine strategies. This feedback cycle accelerates improvement and ensures technology evolves alongside human priorities. Most importantly, partnership preserves empathy and fairness—qualities machines cannot replicate—while freeing people to focus on innovation rather than routine.

Where AI Assists—and Where Humans Must Lead

AI shines in areas where data complexity overwhelms human capacity: operational optimization, risk assessment, and customer personalization. Whether it’s managing inventory, detecting fraud, or tailoring product recommendations, AI excels at speed and scale. These tasks benefit from automation without sacrificing judgment.

But decisions rooted in values, ethics, and human impact must remain firmly human-led. Setting organizational mission, approving legal or regulatory actions, and managing people require empathy and accountability—qualities no algorithm can replicate. In these domains, AI can inform but never decide. Preserving this boundary ensures technology amplifies human judgment rather than eroding it.

Designing Collaboration for the Future of Work

To unlock the full potential of human–AI collaboration, organizations must rethink roles and workflows. Start by mapping processes to identify which tasks are AI-ready and which demand human oversight. Shift job descriptions from task execution to higher-order responsibilities like decision-making, creativity, and ethical review. Position AI as a tool for insight generation, not as a competitor.

Embedding human-in-the-loop decision points is critical. Workflows should ensure humans validate high-stakes actions and intervene when ambiguity arises. Training programs must build digital fluency so employees understand AI outputs, recognize limitations, and know when to override recommendations. Continuous feedback loops—where humans correct AI errors and feed improvements back into models—create systems that learn and adapt over time. Align incentives with collaboration rather than competition to foster a culture where AI is seen as an enabler, not a threat.
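One way to picture a human-in-the-loop decision point is as a small routing rule. The sketch below is illustrative only: the `Recommendation` fields, the `route` function, and the 0.8 confidence floor are assumptions made for this example, not part of any particular product or framework.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    action: str
    confidence: float  # model's self-reported confidence in [0, 1] (assumed field)
    high_stakes: bool  # set by the business process, not by the model


# Hypothetical threshold; a real value would come from the organization's own review.
CONFIDENCE_FLOOR = 0.8


def route(rec: Recommendation) -> str:
    """Send high-stakes or low-confidence recommendations to a person."""
    if rec.high_stakes or rec.confidence < CONFIDENCE_FLOOR:
        return "human_review"
    return "auto_apply"
```

In this sketch, a routine inventory reorder with high confidence is applied automatically, while anything flagged as high-stakes goes to a human regardless of how confident the model is.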

This transformation also demands new skills and mindsets: AI literacy, critical thinking, adaptive learning, and creativity. Employees must learn to question assumptions, interpret outputs, and frame problems so AI can generate meaningful solutions. These capabilities will distinguish those who use AI not just to optimize but to innovate.

Trust, Boundaries, and Accountability in an Agentic Era

As AI systems grow more autonomous, trust becomes both essential and fragile. Leaders must build confidence without fostering automation bias. That means framing AI as a decision-support tool, not a decision-maker, and maintaining human accountability for outcomes. Transparency is key: teams should understand how AI reaches its conclusions, including its assumptions, confidence levels, and data sources. Guardrails such as review protocols and override mechanisms reinforce healthy skepticism and prevent blind reliance.

Clear boundaries are non-negotiable. Humans must retain control over strategic and ethical decisions, high-stakes domains like healthcare and finance, and the setting of goals and constraints. Escalation paths and “kill switches” should be in place to halt autonomous actions when necessary. Data privacy is another critical frontier; agentic AI must not collect sensitive information without explicit human approval.
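A “kill switch” can be as simple as a shared flag that an autonomous process checks before every action. The following is a minimal sketch under stated assumptions: `KillSwitch` and `run_agent` are hypothetical names, and the agent's work is modeled as a plain list of callables. It relies only on `threading.Event` from the Python standard library.

```python
import threading
from typing import Callable, List


class KillSwitch:
    """Thread-safe flag a human operator can set to stop an agent (hypothetical helper)."""

    def __init__(self) -> None:
        self._event = threading.Event()
        self.reason = ""

    def halt(self, reason: str) -> None:
        # Record why the operator stopped the agent, then raise the flag.
        self.reason = reason
        self._event.set()

    @property
    def halted(self) -> bool:
        return self._event.is_set()


def run_agent(steps: List[Callable[[], str]], switch: KillSwitch) -> List[str]:
    """Run steps in order, checking the switch before each one."""
    completed = []
    for step in steps:
        if switch.halted:
            break  # stop before starting any further autonomous action
        completed.append(step())
    return completed
```

The design choice worth noting is that the check happens before each step, so a halt takes effect at the next action boundary rather than interrupting an action midway.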

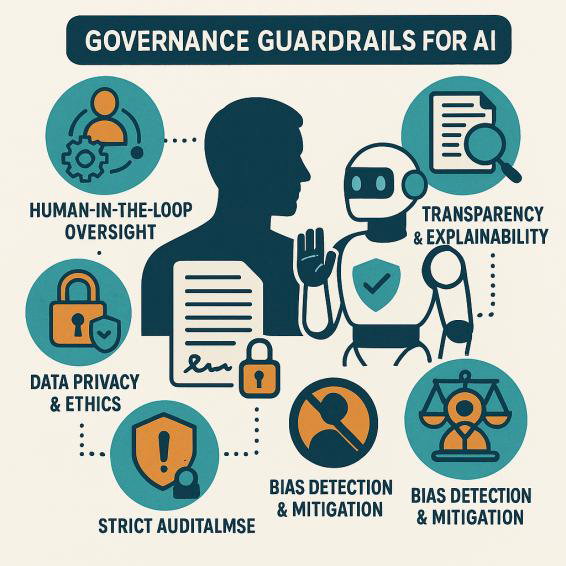

Figure 2: Governance Guardrails

Accountability ultimately rests with humans and the organizations deploying AI. Legal frameworks and emerging regulations underscore this principle: AI may assist, but it cannot absolve responsibility. Governance structures should define who reviews outputs, who signs off on decisions, and how accountability is documented. Audit trails and training reinforce the message that AI augments human judgment—it does not replace it.
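An audit trail of this kind could, for instance, record who reviewed an AI output, who signed off, and whether the human decision differed from the recommendation. The record layout below is an assumption made for illustration; the field names and the `record_decision` and `to_log_line` helpers are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    decision_id: str
    ai_recommendation: str
    reviewer: str        # person who reviewed the AI output
    approver: str        # person who signed off on the final decision
    final_decision: str
    overridden: bool     # True when the human decision differs from the AI's
    timestamp: str       # UTC, ISO 8601


def record_decision(decision_id: str, ai_recommendation: str, reviewer: str,
                    approver: str, final_decision: str) -> AuditRecord:
    """Build an immutable record of one human-approved decision."""
    return AuditRecord(
        decision_id=decision_id,
        ai_recommendation=ai_recommendation,
        reviewer=reviewer,
        approver=approver,
        final_decision=final_decision,
        overridden=(final_decision != ai_recommendation),
        timestamp=datetime.now(timezone.utc).isoformat(),
    )


def to_log_line(record: AuditRecord) -> str:
    """Serialize a record as one JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)
```

Because every record names a reviewer and an approver, the log itself documents that a person, not the model, owned the outcome.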

To ensure AI amplifies human values, organizations need robust governance guardrails: human-in-the-loop oversight, transparency and explainability, bias detection and mitigation, strict data privacy, and ethical use aligned with organizational purpose. These measures build trust internally and externally, reducing risk while strengthening reputation.

The Next Five Years: What Success Will Look Like

Organizations that master human–AI partnership will share common traits. They will cultivate an augmentation-first culture, designing roles and workflows where AI enhances human strengths. They will invest in continuous learning ecosystems, embedding AI literacy and adaptive skills into professional development. Ethical and transparent governance will be woven into operations, making trust a competitive advantage. Their decision architectures will balance efficiency with judgment, keeping humans as final authorities for high-stakes calls. Most importantly, they will harness AI for co-creation, using it not just to optimize but to imagine new products, services, and business models.

The call to action is clear: The future of work is not humans versus machines; it is humans and machines together. Leaders who redesign work, upskill people, enforce boundaries, and institutionalize trust will ensure AI magnifies human creativity, ethics, and judgment—rather than diminishing them. The time to act is now.